There is a type of guy in this world who insists that ChatGPT is about to come to life and kill everybody like the villain in a science fiction film. Some of these guys genuinely believe this, I'm sure. Most of them are selling something, but some of them are just stupid.

I think this is pretty ridiculous, but I approached aisafety.info with an open heart. Perhaps they can convince me! Perhaps there is some information I am unaware of; there's a lot going on with learning computers that I don't really know about, after all, and this is a website which is set up to specifically make a case. They want to convince me! I'm sure they will put their best foot forward.

Alas, reader, I remain unconvinced that a pretty good chatbot is on the verge of coming to life and killing me. I thought it would be interesting to pick at this piece of writing, though. Swish it around in my mouth and then spit it into the bucket like wine.

Here is the particular thing which I will be discussing. It's a case for AI safety!

A case for AI safety has a little disclaimer at the top:

This section paints an intuitive picture to illustrate the risk of smarter-than-human AI causing human extinction. For a more systematic introduction, see our Intro to AI safety.

What do they mean by that, you may wonder. They mean that this contains no real information and is designed to evoke an emotional response that overrides the reasoning and thinking parts of your brain so that they don't need to provide any actual information. But, you see, they're clever clogs and fully aware of that! They additionally smile knowingly at you so that you know they're being honest; this one is the intuitive one, but don't worry. They have a "more systematic" version elsewhere. They're men of science, after all! They can be trusted! (The "more systematic" version is longer, but pretty much the same.)

The piece opens with a bombastic first header!

Smarter-than-human AI may come soon and could lead to human extinction

Whoa! That's so scary! Holy shit! The reader is immediately alert: there is a huge problem! Oh my god!

This header of course contains nothing more true than: Everything may soon be made of pudding and this could lead to complete societal collapse.

It could happen! It's not impossible that this could happen. You can't say with absolute 100% certainty that the world won't be made of pudding in 5 years.

Companies are racing to build smarter-than-human AI. Experts think they may succeed in the next decade. But rather than building AI, they’re growing it — and nobody knows how the resulting systems work. Experts argue over whether we’ll lose control of them, and whether that will lead to our demise. And while AI extinction risk is now being discussed by decision makers, humanity hasn't gotten its act together to ensure survival.

Oh no!!!!!!!!! That's so scary!!!!!!!!!!!! We'd better look at this one piece at a time, since it's so important and scary.

Companies are racing to build smarter-than-human AI.

What companies? What does "smarter than human" mean? Don't worry about it. Smart by what metric? Don't worry about it. They're racing to build it. Are they succeeding? No.

Experts think they may succeed in the next decade.

(bold mine) What experts? Don't worry about it. These experts think, they suppose, that maybe the unspecified "companies" might succeed at this undefined task ("smarter than human") at an unspecified time in the future. Why do they think that? Don't worry about it.

But rather than building AI, they’re growing it — and nobody knows how the resulting systems work.

Damn, that's crazy! Nobody knows how AI systems work? That seems pretty embarrassing for the "experts," but alright.

This is a pretty important assertion. Not because it's spooooooky, which is what you're meant to take away from it (you're meant to think, my god, maybe it's already alive and we don't even know! That's so freaky!!!), but because of what it implies.

I am not scared of the computer or already convinced, so I am taking this as an assertion of fact. This is an assertion of fact which gives us two options:

- "Nobody knows how the systems work" is false. This article is lying, exaggerating, or misinformed.

- "Nobody knows how the systems work" is true. Any people who claim to know how the systems work are lying, exaggerating, or misinformed, and their predictions, opinions, and speculation are based purely on their imaginations.

For now, let's assume that this piece is not lying to us and accept that this is true. Nobody knows how AI works, and anyone who says they do is wrong.

Experts argue over whether we’ll lose control of them, and whether that will lead to our demise.

One sentence later we already encounter some people claiming to be experts on a system nobody understands! Since the experts don't know how the systems work, what they're arguing isn't granted any weight at all by their expert status. What are they experts in? Things besides AI, one must assume, or types of AI not related to the type at hand.

And while AI extinction risk is now being discussed by decision makers,

"Decision makers" here is, I think, meant to imply "serious high-up political guys" – another appeal to authority – without actually specifying who so as not to alienate any particular reader. A cute little sleight of hand. If they named a specific person or group of people here, you'd risk somebody thinking, well I know that guy is full of shit or some bullshit think tank or lobbyist group isn't actually worth listening to. The vagueness leaves room for your imagination to fill in the gap with whatever "decision makers" you trust and think are important. This piece is a "bring your own convincing" sort of situation.

humanity hasn't gotten its act together to ensure survival.

Survival of what? The world made of pudding, of course.

This zinger of a paragraph-ender is already relying on the reader having fully bought in to the narrative that evil robots are coming to kill people, which is pretty bold but (I suppose) forgivable for a tone-setting introductory paragraph.

Header number two. Let's get into the meat of things!

Smarter-than-human AI is approaching rapidly

Oh no!!!!!!!!!!!!!! That's so scary!!!!!!!

When an AI called Deep Blue played chess champion Gary Kasparov in 1997, Newsweek called it “the brain’s last stand” (Deep Blue won). Twenty years later, when another AI took on the much greater challenge of playing the game of Go and beat champion Lee Sedol, one Korean newspaper reported that it caused many Koreans to turn to drink.

Chess and Go are smart person games; we culturally associate being good at these games with being intelligent. This paragraph reminds you that computers can play those games. That means that computers are intelligent. Intelligent in the way people are intelligent, even!

It additionally reminds the reader of the cultural narratives surrounding these events; remember how everybody freaked out about the computers playing games? The Koreans are turning to drink! Or, um, some Koreans, according to one newspaper report.

This is not information supporting a factual argument, it's rhetoric that supports the emotional argument, which is that you should be scared of the computer.

Let's note here that in 1997, "the brain's last stand" did not lead to the robots coming to life and killing everybody. It's almost like there is a history of people overreacting to computers they don't understand because of pre-existing cultural ideas of what constitutes "intelligence."

Today, news of AI outperforming humans in yet another field, is met with a collective shrug. AI conquered one field after another: object recognition fell in 2016, then standardized tests like the SAT and bar exam fell in 2022 and 2023 respectively. Even the Turing Test, which stood as a benchmark for “human-level intelligence” for decades, was quietly rendered obsolete by ChatGPT in 2023. Our sense of what counts as impressive keeps shifting.

Oh shit!!!!!!!!!!!!! That's scary!!!!!!!!!!!!!!!!

Today, news of AI outperforming humans in yet another field,

What news? What field?

is met with a collective shrug.

Is it? By who? Where?

AI conquered one field after another: object recognition fell in 2016,

Now, what does this mean? Since this piece has no citations at all and no bibliography or references section, I'll have to poke around and see.

Computers have been recognizing objects since well before 2016. There's a whole conference dedicated to it (Conference on Computer Vision and Pattern Recognition) that's been running since the 1980s. It ran this year, too, so it seems the field wasn't declared totally over with in 2016. The 2016 welcome address doesn't say that OBJECT RECOGNITION HAS FALLEN TO AI.

From more googling, it looks like a specific object-detection system that uses a convolutional neural network was first introduced in 2016 – "You Only Look Once" (YOLO) – which, according to Wikipedia, is particularly popular and effective. Maybe that's what they're referring to? It's hard to say? A lot of things happened in 2016. The 2016 CoCVaPR featured other researchers working with/writing about neural networks, not just in relation to this one project, so who knows.

Let's set aside what they mean by in 2016, since I don't think I can really sleuth that one out. Next component: as a field, "object recognition" has been conquered by AI. What do they mean by this?

We know it isn't that "object recognition was first accomplished by a computer in 2016," so that's right out.

The field of humans recognizing objects has been taken over by AI, perhaps? That's not the case, either. The job of "recognizing objects" is as much a field humans participate in now as it was before 2016; you can't determine if a computer is doing a good job recognizing objects without looking at those objects with your human eyes, for one thing. A computer can maybe outperform humans in terms of speed, but I highly doubt that 2016 was the year computers became faster than humans at recognizing objects. That was probably much earlier.

Perhaps they mean that previously object recognition in computers wasn't AI but in 2016 it started being AI; this is a completely meaningless statement, since "AI" doesn't have a set definition that includes one form of computer object recognition and excludes another. You can call any of it "AI."

Let's just go ahead and cross this off the list as nonsense; I can't think of a meaning which isn't incorrect.

That was a very rich half-sentence! Here's the other half of the sentence:

then standardized tests like the SAT and bar exam fell in 2022 and 2023 respectively.

For this one, it was easier to find what they're referring to: "GPT-4 performed at the 90th percentile on a simulated bar exam, the 93rd percentile on an SAT reading exam, and the 89th percentile on the SAT Math exam, OpenAI claimed." (source)

And here's the OpenAI marketing press release, which they call "research," referred to in this article.

That is an impressive technological feat, if true. The computer is able to read instructions and follow the instructions! It can take a test! Well done, computer! In this instance, "conquered" seems to mean "did pretty well at."

It's notable that despite having absorbed and chewed on the entire corpus of the internet – all of humanity's knowledge combined, some might say – it does not get things 100% correct. This is, of course, because it's not trained on information, it's trained on language. It's a large language model; the goal is natural humanlike language skills. That's how you have a computer that can't do math that well! It can mostly do math, but getting less than 100% on the math SAT as a computer is a bit embarrassing. Here's another spin on this same information: "AMAZING: this computer program is worse at math than many American teenagers."

Sorry for belittling the accomplishments of the robot. It is neat that it can take human tests.

So, OpenAI claims GPT-4 outperforms some humans on multiple choice tests. Not all humans, but yeah! Some humans! That's cool. If true. I can't think of any reason why the company selling the product would say something misleading or untrue about the impressiveness of the product they're selling, especially when it's a somewhat-improved but very expensive new version of a product they already sold to everyone who would buy it. There have certainly never been any cases of tech people lying about their products. And certainly never about AI specifically. It simply doesn't happen. I mean, that would be illegal!!!!!!! Advertisements are great sources for objective information.

So, how is it relevant that GPT-4 did pretty well on the SAT? It's important because we are once again harkening to cultural ideas of intelligence. Much like being good at chess, getting a good score on the SAT is seen as evidence of being smart. The emotional takeaway here is thus: the computer got a good score on the SAT, and therefore the computer is smart. Smart like how people are smart! Could it be.... ALIVE??

This is a chain of faulty assumptions – the SAT does not grade how smart a person is, and a computer doing well at a task does not mean it is similar to a human. But the facts here, once again, are not as important as constructing a feeling. The feeling is "the computer is smart, like a person - it's got human-level intelligence!"

Even the Turing Test, which stood as a benchmark for “human-level intelligence” for decades,

This is fun: we've come to an actual flat-out lie.

The Turing Test stood as a benchmark for human-level intelligence exclusively in the minds of "people who have vaguely heard of the Turing Test but don't know anything about it" and "science fiction TV shows." It is not a benchmark of human-level intelligence. It has not stood as such for decades. These are factually and objectively incorrect.

was quietly rendered obsolete by ChatGPT in 2023.

Sorry, I meant to say, oh no!!!!!!!!!!!! That's soooooo scary!!!!!!!!!!!

What is the Turing Test? If you are one of the many people who have vaguely heard about it but don't know anything about it, you may be curious what I mean. Isn't the Turing Test a test to see if a computer is smart like a human? That's how it's brought up in fiction, which is pretty much the only context in which it would be brought up at all.

The Turing Test is quite simple! You, a person, are interrogating two subjects: subject A and subject B. One of them is a human being and one is a computer. You ask them questions and their answers are provided to you in written form. Your task is to identify which is the human and which is the computer. If you can't tell them apart, the computer has "passed" the Turing Test.

Alan Turing came up with this idea in 1950; he can be forgiven for not having much understanding of how humans would come to interact with chatbots, the first of which, ELIZA, was invented more than a decade after he died.

ELIZA was very simple; as you'd expect, given the technology of the time. Despite the simplicity of the bot, though, a ton of people who interacted with it were dazzled by its humanity. People immediately believed ELIZA to be intelligent, understanding, and humanlike. Here's the Wikipedia article for the ELIZA effect.

The Turing Test was rendered obsolete as soon as we made machines that could take the Turing Test, because it turns out that humans absolutely love anthropomorphizing anything we can get our hands on and are very generous with the benefit of the doubt. If the computer outputs words in an order that sounds human, we will think, whoa! That computer's alive!

That's because the Turing Test tests people more than it tests computers; it tests your skepticism, your concept of what "seems human," your imagination, your conversational skills.

Nothing like ELIZA existed when Turing came up with his test, and the man who developed ELIZA was surprised and alarmed by how people talked to and about the program. This type of human-machine interaction was new; the way people engaged with it was unexpected.

If Alan Turing hadn't been driven to suicide by his government's hatred of gay people, he would've been 54 when ELIZA was created. I'm sure he would've been interested in it, would have had a lot to say about it, maybe additional thoughts on human-machine interaction and what a "thinking computer" might look like. I wish the world had been kinder to him.

Anyway, perhaps the author(s) aren't lying, they just don't know anything at all about computers and are too lazy to do any fact-checking at all. Is that better?

Our sense of what counts as impressive keeps shifting.

All of humanity is insufficiently impressed with AI's scores on the SAT!!!!!!!!!!!!!!!!!!!!!!!! We need to be more impressed! We need to pay more attention to the AMAZING and ASTONISHING technological advances!!!!!!!!!!!

The pace is relentless. Breakthroughs that once required decades now happen quarterly. Research labs scramble to design new, harder benchmarks, only to watch as the latest AIs master them within months.

AI improved so much so fast! This paragraph is punctuated with two examples: one is a side-by-side comparison of an AI-generated video from 2022 and 2024, both of dogs. The one from 2022 looks like janky garbage, and the one from 2024 looks like a real video clip of a dog. Pretty cool!

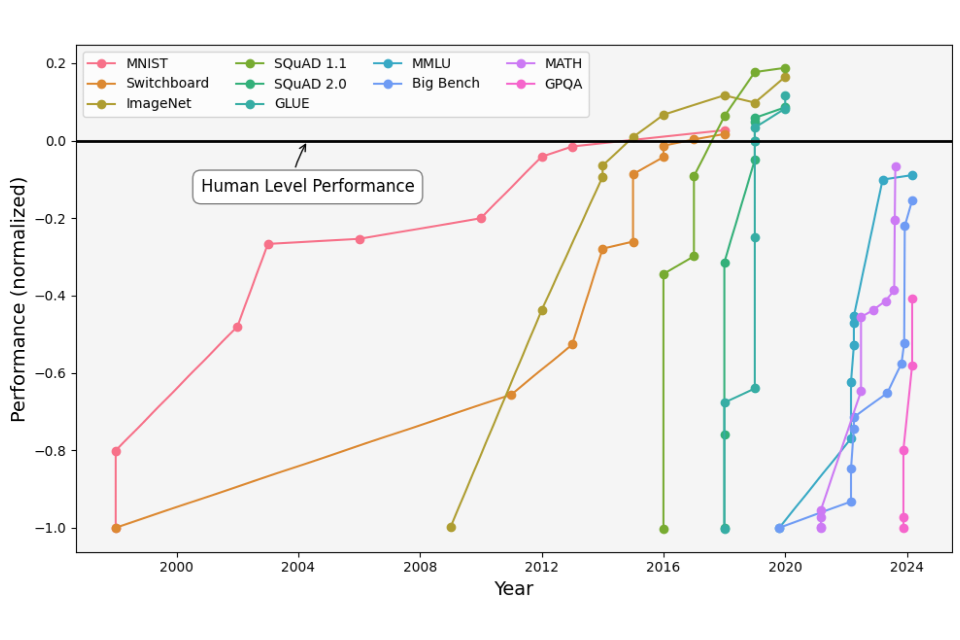

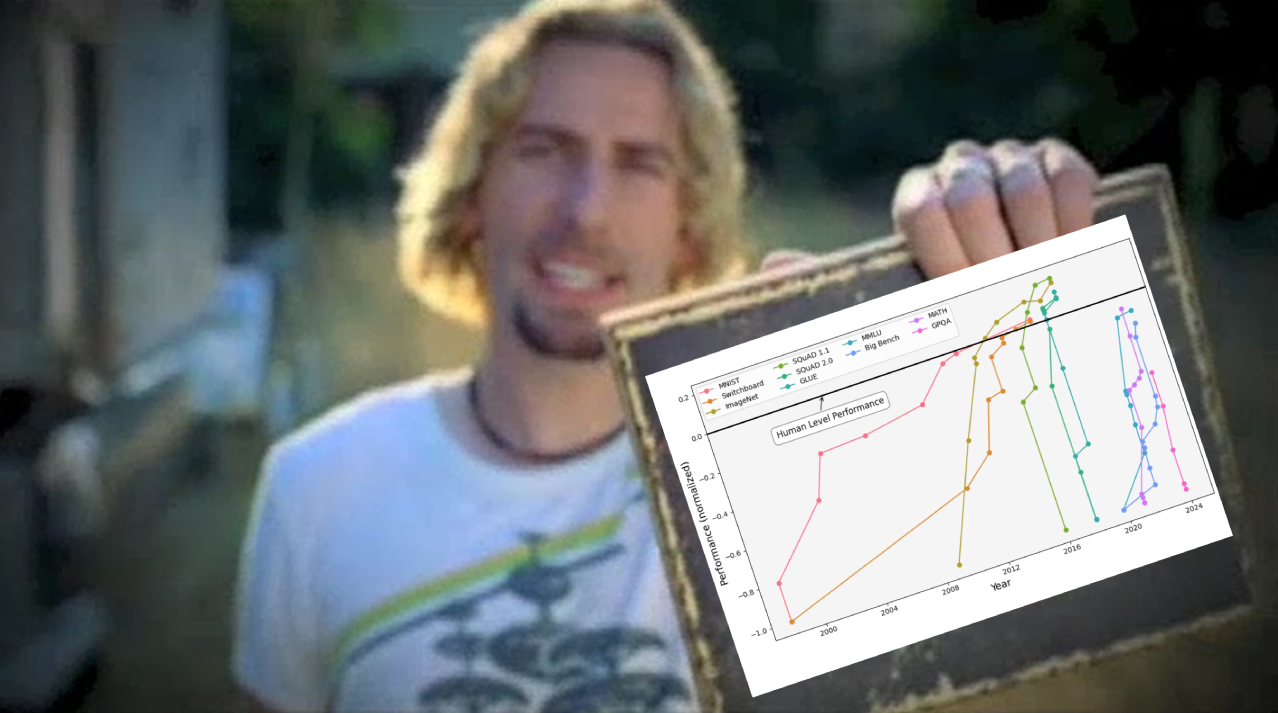

There's also a nonsensical graph! This graph is hotlinked, by the way. I thought that was funny, so I've also hotlinked it.

The graph is taken from this International scientific report on the safety of advanced AI: interim report, by the way. It's figure 2. The data is pulled from four different additional sources, and I don't really feel like doing that much research on shit that does not matter which I do not care about.

If you're curious about the interim report's conclusions, here's two snippets:

This interim International Scientific Report on the Safety of Advanced AI finds that the future trajectory of general-purpose AI is remarkably uncertain. A wide range of possible outcomes appears possible even in the near future, including both very positive and very negative outcomes, as well as anything in between.

There is no consensus among experts about how likely these risks are and when they might occur.

Pretty scary!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Let's obey Nickelback and look at this graph. Human level performance at what? By what metric? In what context? What is this from? What humans? Don't worry about it. Look at the lines! Look how they go up!

But don't look at how the sharp increase has flattened out in every single one of these. Don't look at that part - look at the part where it goes up steeply and pretend that it kept doing that at the same pace, even though it did not, and then additionally imagine that it will keep going steeply up in the future. Actually, maybe don't look at the graph. Its job is to simply be here and be a graph.

I think it's obvious by now that there is no actual information here, only vibes. You don't need to understand this graph, what it's from, or what it's actually counting, you need to feel that AI is getting better so fast that soon it'll be better than we can even imagine.

The big prize is now Artificial General Intelligence (AGI). Not narrow AI that excels at one task, such as playing chess, but AI that can learn anything a human can learn, and do it better.

The "big prize" is for the companies I suppose, as they imagine it'll make a lot of money. So, here we go. The companies (who?) are trying to make (are they?) a computer which can learn anything a human can learn and do it better. That's a pretty big goal, and a big leap from generating cool videos of dogs swimming! Especially considering nobody understands how the systems that make the dog videos work! How are they going to achieve this massive leap forward in technology?

Tech companies like OpenAI, Anthropic and Google DeepMind are spending billions in a high-stakes race to be the first to build AGI systems that match or exceed human capabilities across virtually all cognitive domains.

First, by spending a lot of money. I guess that's a start. Probably they should try to work on, you know, understanding how the systems work, but that's something you could spend money on. Next?

People involved with these companies — investors, researchers and CEOs — don't see this as science fiction. They're betting fortunes on their ability to build AGI within the next decade.

[Bolded = italicized in the original. It's important to retain the dramatic flair.]

There is no next. They're spending a lot of money and they're using their imaginations.

Are companies actually spending billions of dollars on trying to build AGI systems? Well, there's no evidence presented to suggest as such. We are supposed to take this as a given; of course they are! It is known!

And, since they are, how could they be wrong? If people (unspecified) involved with companies (unspecified) believe that the computers are going to come to life, then you should as well. Because...................................... they're rich?

In fact, some of the leaders in this field, who have access to bleeding edge, unpublished systems, believe human-level AI could arrive in the next few years.

[Bolded = italicized in the original. It's important to retain the dramatic flair.]

Leaders in the field? Who? Well, I suppose it doesn't matter. Remember, nobody understands how the systems we have already work, so they definitely don't understand the definitely real secret extra-good systems. Which are, I'm sure, very real, they're just top secret.

Experts are also saying that they have access to world-made-of-pudding technology, by the way. It's top-secret, but it's real. What, you want me to cite a source for that? What are you, some sort of fucking nerd?

What happens if they succeed? Such systems could think thousands of times faster than biological brains and we could create millions of them. They could access and process vast amounts of information in seconds, integrating knowledge from books, papers, and databases far faster than any human could. They could automate most intellectual work — including AI research itself.

Yeah, man. Imaginary purely hypothetical computers which do not exist could do anything. Because they don't fucking exist. You imagined them.

What if the world was made of pudding? Everything would be really squishy and sticky and smell bad. Really tall buildings wouldn't be possible, because pudding is not structurally sound enough to support a lot of vertical weight. Cancer wouldn't exist because you'd be made of pudding.

That last point is crucial — AGI could accelerate AI development even further, leading to superintelligent AI. Don’t think of superintelligent AI as a single smart person; think instead of the entire team of geniuses from the Manhattan Project, working at 1000x speed.

Whoa!!!!!!!!!!!!!! 1000x speed Manhattan Project????? That's so scary!!!!!!!!!!

There is no basis for any of this beyond "imagine what if this happened." It is a flight of fancy. "If a magic computer existed, it would be like this" is not an argument, because the computer only exists in your imagination. These computers are capable of anything, because they do not exist and there is no indication whatsoever that they will exist.

This is not just magical thinking, this is literally just writing fiction. I am not going to entertain this. I'm replacing "AGI" and "AI" with "magic" going forward, because they are identical here.

By the way, my uncle who works at Harvard Google told me they have fairies in a secret lab there and my other uncle who has access to the pudding-world technology confirmed it. The companies are actually planning to combine the fairies with the pudding-world tech, according to key experts in the field.

The companies attempting to harness magic openly discuss what will happen when magics surpass their creators. These aren't just corporate pipe dreams: prestigious magic researchers independent of these companies see their predictions as worryingly plausible.

The researchers who don't understand the systems, because nobody understands the systems? Those researchers?

Let's circle back to that, since it has come up repeatedly that there are definitely some unspecified but very real smart people who know what they're talking about who are sounding the alarm on magic spells.

Clearly, "nobody knows how the resulting systems work" was not true, since we're repeatedly gesturing to these experts who we are meant to understand as having secret insider knowledge and expertise on the systems.

So, it was actually the other option: this article is lying, exaggerating, or misinformed. This article is not trustworthy, and the author(s) are not interested in making it trustworthy. Facts and information are irrelevant. They are not interested in presenting you with factual information, they are interested in making you feel scared of a big scary magic computer that – I can't emphasize this enough – does not exist.

Yet our social systems, our regulations, and our collective understanding remain focused on current magic capabilities, rather than on the magic that may be developed in the coming years.

Can you believe that? Our regulations are focused on real things that actually exist instead of imaginary things that don't exist? What the hell!!!!!!!!!!!!! That's soooooo fucked up!!!! What are we going to do when someone sues me for breach of pudding contract in pudding world? Can you believe we have no laws on the books regulating the use of magic potions that turn you into a frog??????????? Not. Even. One.

What does a society look like with millions of Oppenheimers working around the clock? What will we do when there is magic that can do more or less any job far better than us, and never needs a lunch break? What happens when magic surpasses its creators both in numbers and capabilities? The truth is: we don’t know.

If things go well, these spells could help solve some of humanity's greatest challenges: disease, poverty, climate change, and more. But if these spells don't reliably pursue the goals we intend, if we fail to ensure they're aligned with human values and interests... it could be game over for humanity.

It’s time to pay attention.

The author(s), fully convinced that this article has you on board with its purely fictional flight of fancy, decide to get even more fanciful and start formatting the piece as if it's the back of a paperback thriller novel from the grocery store.

While they're clearly taking this seriously as a dramatic story, it doesn't seem like they're taking it seriously as anything connected to the real world that normal people actually live in. They're pretty deep in puddingworld. The article has sprinted away towards the pudding horizon, leaving me behind in the real world.

Our next section:

AI scientists are sounding the alarm

Hinges, as with basically the entire piece, on the "appeal to authority" logical fallacy. Two expert AI scientists (Geoffrey Hinton and Yoshua Bengio) are worried about the magic computers coming to life; therefore, you should be worried too. ("These aren't fringe voices — they're among the most cited AI researchers in the world.")

A highly-cited AI researcher couldn't possibly believe something incorrect or be wrong – he's highly-cited! He's an expert! As we all know, the more times you are cited the more immune you become to being wrong.

Experts form expert opinions on things based on evidence. Cranks, flimflammers, crackpots, and wackadoos form opinions based on shit they made up.

The piece includes a video clip of Mr. Hinton saying: "My guess is, we'll get things smarter than us, with a probability of about .5, in between five and twenty years from now. And if we do, there's quite a good chance they will take over. And we should be clearly be thinking about how we prevent that."

And a clip of Mr. Bengio saying: "Imagine we created a new species, and that new species was, you know, smarter than us in the same way that we're smarter than mice or frogs or something. Are we treating the frogs well?"

Evidence and facts cited: 0. Imagine and guess that something fantastical happened; isn't that worrying? Aren't you scared? What if I say a number? What if I say the word "probability" like a cartoon character with big geeky glasses?

According to my research - you can't see it, it's secret - there's actually an 85% probability of the world being made of pudding in the next 7 years. Sorry to be the bearer of bad news.

I recommend watching Angela Collier's videos about crackpots. Alien believers, their rhetoric, and their arguments are basically identical to "the computer's alive and it's going to kill you."

The rest of the "experts" mentioned in this section are businessmen with a financial interest in convincing you their tech is better than it actually is: Sam Altman (OpenAI CEO), Ilya Sutskever (OpenAI co-founder), Dario Amodei (Anthropic founder), Demis Hassabis and Shane Legg (Google DeepMind founders), and Elon Musk (xAI founder).

I don't think I need to explain that Elon Musk is a fucking moron? I feel like that ship has really sailed. Either you know he's a blithering idiot or you are yourself deeply stupid. Or, I guess, you're a Nazi who just doesn't mind that he's an idiot because he's a rich Nazi.

"Snake oil salesmen, who are of course experts in the field of medicine, all agree that snake oil cures cancer," is not evidence of the efficacy of snake oil. It's marketing. People who are trying to sell you something are still not the people you should be consulting with regard to how awesome and advanced and special and magical their product is.

These expert researchers are not communicating facts they know or information acquired through their research, nor are they examining evidence and coming to conclusions based on that evidence. They are making shit up based on things that do not exist.

There are anti-vax nurses and doctors who think that vaccines cause autism; it is fully possible to undergo specific training and achieve qualifications in a field and still believe stupid fake bullshit. If you look at evidence, you will find that vaccines do not cause autism, that the only study which ever asserted that they did was fake and debunked, and there are many doctors and nurses who believe in the efficacy and safety of vaccination.

I am interested in the real world, and care about things like evidence, facts, and information. I am not interested in what some guys trying to sell books or speaking engagements or get on TV reckon might happen based on their overactive imaginations.

As with any complex issue, there are dissenting voices. Some believe that magic is many decades away, or that we can control magic like any other technology. However, it's no longer reasonable to dismiss extinction risk from magic as a fringe concern when many pioneers of the technology are raising alarms.

I think it's actually very reasonable, because "some people are scared of it" does not make something real. You can actually dismiss things that lots of people believe if it's fake bullshit that they made up. "Lots of people think so" is not evidence.

This actually is just whining; you have to take us seriously, we're not fringe, we're normal!!!!! We're not weirdos!!!!!!!!

I remain unmoved. If you want to be taken seriously, how about you do something serious?

So, what is it that has got these researchers so worried?

Let’s look at what makes magic different from other technologies we’ve created in the past, and what the potential risks are.

The article promises to start looking at some information soon. Perhaps we're returning to reality from the world of pudding.

Next header: Modern Magic systems remain fundamentally opaque

Just kidding. We're back to the assertion that noooooobody understands this magic technology, which is a bold move on the heels of an entire section dedicated to insisting that the experts know what they're talking about.

With magic potentially arriving within a decade, we need to make sure that it works in the way we intend it to. This is sometimes called the “alignment problem,” essentially: can we ensure that magic’s goals are the ones we want it to have?

Now that we all agree that this is definitely happening, we can move on! The reader is treated as if they are already convinced, as if any actual evidence has been presented. So, let's recap the evidence presented for the imminent AGI apocalypse:

- Some people think it maybe could happen at some point.

- Computers can do a lot of stuff.

- Some technologies have advanced very quickly in the last few years.

- Several companies are spending a lot of money on AI.

- Nobody understands how it works.

None of this is actually evidence of anything in particular; these things only add up to "computer coming alive and killing you" if you already believe that and are looking for things to reinforce that belief. They are not convincing or meaningful; they are a collection of loosely-related uncited facts, presented together to evoke an emotional response.

So, with this – once again – purely imaginary technology which does not exist – can we ensure that its goals are the ones we want it to have? Yeah, I think so. Just imagine that you can. Because it's imaginary.

For starters, reliably giving them goals is hard because we don’t actually know how our most advanced magic systems work.

This section is dedicated to insisting that "we" (here meaning "humanity," I think) don't understand the current functionality of programs like GPT-4. Apparently "we're not even close to understanding how these parameters work together to produce the AI's outputs."

Well, that's good news then! Turns out that not only does nobody understand this technology, we're not even close to understanding it.

So, how are we going to invent evil sentient robots if we don't understand how AI works at all? I believe the theory here is that it will simply happen somehow on its own. Billions of dollars will transform by magic into Ultron from the film The Avengers: Age of Ultron. Perhaps the fairy from Pinocchio will fly down from the sky and turn the chatbot into a Real Boy.

The trick here is to present a specific type of technological progress as natural and inevitable. Nobody needs to understand anything in order to create it; the technology is already there, waiting to be discovered, like a statue within a block of marble. The line of "progress" has been going up and so it will continue to go up. It isn't a computer program that people are working on developing, it's magic. Technology is something that just happens.

You need to believe that it's magic, because if you think of it as a computer program a person would have to create then it stops making sense in this framework. If somebody did develop an evil computer that kills everyone, their boss would probably say something like, "and how's that supposed to make me money? get back to customer service chatbots for real estate companies, you dweeb!"

This lack of understanding is a serious problem when we try to make magic systems that reliably do what we want. Our current approach is essentially to provide millions of examples of the desired behavior, tweak the spell's parameters until its behavior looks right, and then hope the magic generalizes correctly to new situations.

This piece is fun because it presents any and all failings of AI as evidence that it is just so powerful. It's like a big strong magic dragon – its power is simply so immense we pitiful humans struggle to control it! It's a bucking bronco!

A computer program that does not reliably do what you want it to do is a bad computer program. When you tell your code, "do this," and it doesn't do it, that is not you being a cowboy clinging onto a wild stallion you're going to tame and impress everyone with your big muscles; that's you doing a bad job at using the computer. It is not badass or magical for software to not work.

The argument presented here is: AI doesn't work very well, and nobody knows how it works. That means that when it inevitably comes to life, it'll be evil. This does not follow. It could just as easily do nothing at all.

The opacity of modern magic systems is particularly concerning because we're explicitly trying to build goal-directed magic. When we talk about a magic system having a "goal," we mean it consistently acts to push toward a particular outcome across different situations. For instance, a chess AI's “goal” is to win in that all its moves push toward checkmate and it will adapt to respond to the moves of its adversary.

Oh, that's interesting. We're trying to build goal-directed AI now? What happened to "not narrow AI that excels at one task, such as playing chess, but AI that can learn anything a human can learn, and do it better"? Was that also a lie?

The definition of what "AGI" is is changing again to suit the current fear that the author(s) is trying to make you feel. Now we're talking about a totally different type of computer program, built for different reasons, as if it's exactly the same thing we were talking about before.

A chess AI is limited in the things it can do: it can read the game board and output legal moves, but it can't access its opponent’s match history or threateningly stare them down. But as magic systems become more capable and given more access to the world, they can take a much wider range of actions, and can achieve more complicated goals. And of course companies are rushing to build these broader goal-directed systems because they’ll be incredibly useful for solving real-world problems, and thus profitable.

So, now companies are all trying to build "broad goal-directed systems" to "solve real-world problems" – and, of course, solving these real-world problems will be profitable. Of course!!!!!!

What problems? None are specified, of course, because specificity is the enemy of vibes.

What real-world problems could an evil alive computer solve that humans can't, and why would doing so be profitable?

The implication here is so astonishingly stupid that I find it difficult to believe somebody sincerely believes it. This paragraph presents the world as it exists in a children's cartoon. In order to improve The Problems – don't worry about what they are – a company needs to build a big Problem-Solver-O-Matic, and then everybody will give them money for doing such a good job with the Problem-Solver-O-Matic!

What on earth are the problems which need solving in this way? Do you think that climate change is a fucking mystery we need a computer to figure out? Racism? Poverty? Genocide? Rape? Income inequality?

Do you really, genuinely, believe that "the companies" will solve problems if only they knew how?

How the fuck do you think the problems got there in the first place, you stupid motherfucker?

No, no; of course I'm coming at this all wrong. "Problems" are like technology; they're naturally occurring, simply spawning spontaneously out of the ether, and have nothing to do with anything else. Life is like a video game! We just need to keep unlocking more things in the tech tree, then apply ITEM to PROBLEM to COMPLETE THE QUEST.

I'm sorry for getting heated. Please, let's continue hearing about the magical alive future computer that's going to solve all the unspecified problems.

And here's where the danger emerges: there's often a gap between the goals we intend to give magic systems and the goals they actually learn.

Even in fairly simple examples, the magic can be unhelpfully “creative” when it comes to its goals. Researchers attempting to build a magic that could win at a boat racing game trained it to collect as many points as possible so that it would learn to steer the boat to victory. Well, it turns out that in this game, spinning around in a circle and crashing into things gets you points as well, so the boat ignored the race entirely!

What do you mean, "it turns out"?

This is presented as though it's an astonishing discovery on the part of the computer rather than an obvious oversight by the researchers. This sounds like a very poorly put-together study!

That's because it's not a study, it's more marketing materials from OpenAI.

OpenAI and, by extension, this article, want the reader to think: "Whoa! The computer thought of something that even the smart genius researchers didn't notice! It really is smarter than humans!" But I do not think that.

I think employees of OpenAI made a bot designed to get a lot of points in a game, and the bot they made got a lot of points in the game. It was not "creative."

Am I meant to truly believe that the employees of OpenAI did not understand how points were acquired in this flash game? This game which they programmed a bot to play?

Are the researchers at OpenAI so bad with computers that, to them, a free flash game is just as complex and unfathomable as a neural network? If so, could it perhaps be possible that they are just fucking stupid?

Or consider how OpenAI trained ChatGPT to avoid unsavory responses. Days after release, users circumvented these safeguards through creative prompting.

How is this related to the boat example? It isn't, except in the extremely broad sense of "goals." Interesting that both of these are examples of OpenAI employees being bad at their jobs, and that they are both presented as if they are evidence of how powerful and amazing AI is.

Despite significant research efforts, alignment remains stubbornly difficult.

By using the word "alignment," we can say that "AI isn't very good at anything" is actually an indication that it's evil and up to no good rather than an indication that it's a computer program that isn't very useful. This is a very funny thing to do (the car won't start? alignment problem. it's probably evil. oven takes forever to get up to temp? probably evil.) but not a convincing argument that the computers are going to come to life soon.

I am convinced that employees of OpenAI are bad at using computers, though. We've seen some solid evidence of that.

And as magic capabilities grow, the consequences of deploying misaligned magic become increasingly severe.

I agree that it is bad when important things get broken by the useless computer program that can't do anything right. "It doesn't work properly" is not, however, evidence that it is sentient or going to be sentient soon.

We come to our penultimate header:

The stakes rise with system capability

This, and the previous paragraph, are doing a little bit of sleight of hand here with "capability." The systems are not necessarily more capable, but they are advertised as such and are being improperly used for things to which they are not suited.

Subtle misalignments between what we intend and what magics optimize for are already present in our world. Social media wasn't built to damage teenage mental health; it was built to maximize engagement. The harm wasn't the goal; it was collateral damage from relentless optimization for something else entirely.

Here we see "AI" being used much more broadly all of a sudden; now social media is AI. Alright, sure! Fine by me.

There are a few assertions packed in here: 1) social media damages teen mental health, 2) social media was built to maximize engagement, 3) any harm inflicted upon teens' mental health was an accident.

I will start with my pettiest of pet peeves, which is the persistent usage of the term "social media" to mean "Facebook and Instagram and TikTok," which is not what the term actually means. "Social media" is an extremely broad term which encompasses any and all media through which users can create and share anything. YouTube is social media. Slack is social media. Flickr is social media. Usenet, Yelp, and Fortnite are social media. If you are using the term to mean something specific then you should actually explain what you mean and not simply assume that everybody will intuit psychically which handful of websites you're actually referring to.

Okay. Social media damages teen mental health. Is that true? It once again falls upon me to put together this article's bibliography for it, since the author(s) couldn't be bothered to do it themselves. This is, by the way, extremely annoying. If I were grading this piece I would be perfectly justified in flunking it without even reading it since it doesn't cite its sources.

I don't feel like doing a ton of research, so I'll look at two overview-type pieces:

Online communication, social media and adolescent wellbeing: A systematic narrative review is the first one I found, but it's a little old (2014) so I grabbed a second one that's more recent to compare; Social Media and Youth Mental Health: Scoping Review of Platform and Policy Recommendations.

The verdict: "social media" is a really broad term that encompasses an extremely wide variety of things. No actual causal link between "social media use" and "damaged mental health" has been found. Results of studies are extremely mixed; since different studies are looking at different things it's very hard to say anything for sure.

There is some evidence indicating an association between more screentime and depression/anxiety, but nothing indicating that the screentime caused the depression/anxiety. As someone with both depression and anxiety, I humbly suggest that spending more than 6 hours a day playing video games is a symptom of depression and not a cause. Just my 2 cents.

There are specific things on social media that can cause harm to a teen's mental health: you will probably not be surprised to learn that cyberbullying, pro-eating disorder content, and child predators, for example, are harmful. It's bad when bad things happen to you. Similarly, exposure to antivax misinformation does make people less likely to get vaccinated. These are things that a teen might encounter on social media, though they aren't guaranteed to. A kid who spends three hours a day snapchatting her boyfriend and then looking up Yelp reviews for the local McDonald's is not having the same "social media" experience as a kid spending 10 hours a day scrolling through TikTok, you know?

So, that's a big academic shrug and an "ask more specific questions."

There is some evidence that links dark patterns like algorithmic feeds and infinite scrolling with negative mental health outcomes and, in particular, addictive/compulsive behavior and disrupted sleep due to staying up scrolling instead of going to bed properly. Which brings me to points 2 and 3.

It is certainly true that some social media is currently designed to maximize engagement, which is not what the article actually said but is probably what they meant. (I am being generous here. You're welcome.)

Is the harm caused by maximizing engagement "collateral damage," though? Debatable. I don't think so; the damage and the target outcome are the same thing. The goals are "keep scrolling forever" and "feel emotions, such as anger and fear, which prompt engagement"; these are also the harms. That's not really an "accident" or "something else entirely."

More broadly, I think it is once again so far beyond naive that it lands firmly in "stupid motherfucker" territory to believe that any harm caused by (for example) Facebook is an accident.

Echo chambers and misinformation drive violence, but they also are a consequence of, and in turn drive, user engagement. This fundamental tension at the heart of Facebook’s business model has been known to its management since before the genocide. It is put most starkly in a leaked memo from former Facebook Vice President Andrew Bosworth. He wrote “We connect people. Period. That’s why all the work we do in growth is justified. . . That can be bad if they make it negative. Maybe someone dies in a terrorist attack coordinated on our tools. And still we connect people. The ugly truth is that we believe in connecting people so deeply that anything that allows us to connect more people more often is *de facto* good.”

source

The technology is not "misaligned." It is working as intended. The purpose of a system is what it does.

The consequences of misalignment in today’s magics are usually manageable — an incorrect recommendation or an inappropriate response. We can mostly shrug these off.

Oh, never mind. Social media isn't AI, they just put in a completely unrelated anecdote to fill space. That, or damage to teen mental health is something we can shrug off? Why bring it up, then? (The answer is, again, to prompt emotional reactions like "worry" in the reader).

But this won't remain true for long. The stronger the optimization, the more it pries apart the gaps between the target and what we want.

Oh no!!!!!!!!!!!!

The more you optimize AI, the worse it gets at doing its assigned tasks! Because the optimization is so strong!

If left unchecked, this could ultimately lead to our extinction.

OH NOOOOOOOOOOOOOO!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Computers are so scary! If you optimize them too much, they'll get so bad at performing tasks that they'll kill all humans!

How is this true? What do they mean by this? What does "optimize" mean if it somehow makes the computer worse? How could this possibly actually happen? How does this follow? Do. Not. Worry. About. It.

Once again this is operating on emotional logic rather than, you know, things that exist in reality. It would be pretty fucked up if you somehow "optimized" a computer so much that it came to life and killed you. How would you accomplish this amazing task? Dunno, but it'd be fucked up if it happened.

We once again have to nod along with the assumption that at some point a fairy is going to appear and cast a magic spell on the computer that fundamentally transforms it into a magical evil guy.

Human values are intricate and nuanced. Almost every goal that magic could optimize for would, when pursued to the limit, fundamentally conflict with what we care about in some important way.

[Bolded = italicized in the original. It's important to retain the dramatic flair.]

Oh, really? Like what?

Like what, though?

Hey. Hey, come here. Come here and look at me.

The film Avengers: Age of Ultron is not a documentary.

Ultron is not real. Tony Stark is not real. It's a comic book movie for children. You have to understand that. I am begging you to understand that comic books are not real life.

I'm running out of ways to say that this is fucking ridiculous. Computer programs aren't mischievous genies and you cannot "optimize" so hard that they become mischievous genies.

Following this, the article once again launches into full-on fiction writing, which I do not care to indulge because it is stupid. I'm glad they're having fun and being creative and going on a little imagination journey in their mind palace. Perhaps the authors should consider signing up for a writing group at their local library.

I shall replace this segment with one of equivalent value pondering the IMMINENT RISKS AND COMPLICATIONS associated with the world made of pudding.

If the world was made of pudding, summer would be absolutely unbearable, wouldn't it? Everything would be so gooey. I suppose winter wouldn't be so bad, although since we would also be made of pudding we'd need to be careful there. Man, wouldn't it be hard to balance dressing warm enough to prevent our pudding-bodies from freezing but not so warm that we become fully goo?

In puddingworld, would we even exist as "ourselves", though? Or would the world just be one continuous pudding? Would there be as many types of pudding as there are types of things in our world, or just different flavors? And after all, pudding is pudding. It'd all melt together, surely.

Okay, we're back for the final section of the piece:

The dangerous race to smarter-than-human AI

If magic companies succeed in building magic (and beyond) in the next decade, that does not leave us a lot of time to solve alignment.

Big if. Get it?

And here’s the problem. While companies pour billions into making magic more capable, safety research remains comparatively underfunded and understaffed. We're prioritizing making magic more powerful over making it safe.

Ahhhh, there it is! We've come to "the problem." The problem is, of course, that nobody is giving the "AI safety researchers" billions of dollars! They're giving OTHER PEOPLE billions of dollars!

The end of this piece has a "what can you do to help" call to action, and one of the ways you can help is by donating. Donating to who? Effective altruists, of course.

Effective altruists are really good with money, I hear. If there's one thing I know about effective altruists, it's that you should definitely give them your money and they're super honest.

But I'm getting ahead of myself. This piece offers one entire paragraph that isn't completely incoherent nonsense:

Companies face intense pressure to deploy their magic products quickly — waiting too long could mean falling behind competitors or losing market advantage. Moreover, magic companies continue pushing for increasingly autonomous, goal-directed systems because such agents are expected to create far greater economic value than non-agentic systems. But these agents also pose significantly greater alignment challenges: they are inherently harder to align because they have a lot more ways they can act in the world we couldn’t possibly have tested for, and their failures are much more dangerous since they can autonomously take harmful actions without human oversight. This creates a dangerous situation where magic systems that are both very capable and increasingly autonomous might be deployed without being thoroughly tested.

I remain amused by their insistence on using "alignment" to mean "functional." But yes, if a poorly-made program is not tested properly, doesn't work, and is then put in charge of something without any human oversight, that would be very bad. It's also much more likely than a computer program being granted sentience by the Pinocchio fairy and pushing a big red button that says KILL ALL HUMANS, since it is something that happens all the time. Currently, AI doesn't really work that well and keeps being put in charge of things with little or no human oversight, and it has been going pretty badly.

Don't worry, though; the ability to coherently communicate about things that might actually happen vanishes immediately. Here's the next paragraph:

If those magics are superhuman and have misaligned goals, they probably won’t let us go “oops, my bad, let me make another version.” Instead, they’ll take over control of the future from humanity.

What does that mean? What does "superhuman" mean? What misaligned goals? In what way will they "take over control of the future"? What would that look like? Don't worry about it; the answers are not important.

It's interesting that in this fantastical future humans are rendered completely helpless in the hands of the evil AI. In the real world, you can turn a computer off. Or hit it with a hammer. But since it's a completely fabricated sci-fi future with an evil robot, I suppose that's pretty easy to get around, right? The evil AI invents forcefields! It summons three waves of enemies that attack you and soak up your ammo! It becomes a cloud of nanomachines and flies into your eyeballs and takes over your brain! Oh shit!!!!!!!!!!!!!!!!!!!!!!!!!

Many employees at the companies building these magics think that everybody should slow down and coordinate, but there are currently no mechanisms that enable this, so they keep on racing towards ever more powerful systems.

It's too bad we don't have some kind of... I don't know, electronic mail? Or some kind of instant messaging... some way to communicate and coordinate with each other... sadly, that's just not possible. Obviously they'd do it if it were possible, because companies are good and always do good things. It's just that there's no way to do it.

The article meanders into another flight of fancy which is similar to all the other ones. What if the world was made of pudding?

In the world made of pudding, I'm imagining Jelly World from Neopets, where it's like the world as it is now but everything is pudding. Like, I'm a human made of pudding typing on a laptop made of pudding. In the pudding world we'd have some interesting ideas about what is and isn't "food," though, since it's all pudding. Maybe "food" pudding has a different texture or flavor than "non-food" pudding? Or perhaps the difference is entirely cultural, like our real-world ideas about which animals are acceptable to eat.

If the world was made of pudding, how is anything alive? It's pudding? Are souls real in this world? Are souls made of pudding? The companies (unspecified) are not answering these questions, despite the concerns being raised by highly-respected intellectuals (me) in the field (imagining the world made of pudding).

After our flight of fancy, we come to the end of the article and the call to action. It suggests you read some more of their great articles or perhaps give somebody some money! I recommend not doing that, because it's fucking stupid.

This concludes my journey into the wonderful world of AI safety.